After logging the incidents that occur in the field or after deployment of the system, we also need some way of reporting, tracking, and managing them. Defects are most commonly reported against the code or the system itself. However, defects can also be reported against requirements and design specifications, user and operator guides, and tests.

Why report incidents?

Reporting incidents has several benefits:

- In some projects, a very large number of defects are found. Even on smaller projects where 100 or fewer defects are found, it is very difficult to keep track of all of them unless you have a process for reporting, classifying, assigning and managing the defects from discovery to final resolution.

- An incident report contains a description of the misbehavior that was observed and classification of that misbehavior.

- As with any written communication, it helps to have clear goals in mind when writing. One common goal for such reports is to provide programmers, managers and others with detailed information about the behavior observed and the defect.

- Another is to support the analysis of trends in aggregate defect data, either for understanding more about a particular set of problems or tests or for understanding and reporting the overall level of system quality. Finally, defect reports, when analyzed over a project and even across projects, give information that can lead to development and test process improvements.

- Programmers need the information in the report to find and fix the defects. Before that happens, though, managers should review and prioritize the defects so that scarce testing and developer resources are spent on fixing and confirmation-testing the most important defects.
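The points above describe a report that records the observed misbehavior and its classification, is prioritized by managers, and is tracked from discovery to resolution. The following is a minimal Python sketch of such a record. The status names, field names, categories and the "1 = most urgent" priority scale are hypothetical, chosen only for illustration. Two helpers show the two uses mentioned: ordering open defects so the most important ones are handled first, and counting reports per classification to support trend analysis.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    # Hypothetical lifecycle states, from discovery to final resolution.
    NEW = "new"
    ASSIGNED = "assigned"
    FIXED = "fixed"
    CONFIRMED = "confirmed"  # confirmation test passed
    CLOSED = "closed"


@dataclass
class IncidentReport:
    id: int
    description: str        # the misbehavior that was observed
    classification: str     # hypothetical categories, e.g. "functional"
    priority: int           # hypothetical scale: 1 = most urgent, set during review
    status: Status = Status.NEW
    assignee: Optional[str] = None


def triage(reports):
    """Return reports that are not closed, most important (lowest number) first."""
    open_reports = [r for r in reports if r.status is not Status.CLOSED]
    return sorted(open_reports, key=lambda r: (r.priority, r.id))


def trend_by_classification(reports):
    """Count reports per classification to support aggregate trend analysis."""
    return Counter(r.classification for r in reports)


# Illustrative, made-up reports.
reports = [
    IncidentReport(1, "Crash on save", "functional", priority=1),
    IncidentReport(2, "Slow search", "performance", priority=3),
    IncidentReport(3, "Wrong total", "functional", priority=2, status=Status.CLOSED),
]
print([r.id for r in triage(reports)])       # [1, 2]
print(trend_by_classification(reports)["functional"])  # 2
```

A real defect-tracking tool would add more fields (reporter, dates, severity, environment), but the same two views apply: a prioritized work queue and aggregate counts.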

While many of these incidents will be user error or some other behavior not related to a defect, some percentage will be defects that escaped quality assurance and testing activities.

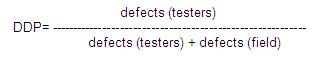

The defect detection percentage (DDP), which compares field defects with test defects, is an important metric of the effectiveness of the test process.

Here is an example of a DDP formula that applies to the last level of testing before release to the field:

DDP = defects found in testing / (defects found in testing + defects found in the field) × 100%
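A minimal Python sketch of the DDP calculation for the last test level before release follows. It assumes both counts refer to the same release and that field defects are counted over some agreed period after release (the period is an assumption, not specified here). The function name and the example numbers are hypothetical.

```python
def defect_detection_percentage(test_defects: int, field_defects: int) -> float:
    """DDP for the last level of testing before release.

    test_defects:  defects found by that test level
    field_defects: defects reported from the field after release
                   (assumed to be counted over an agreed period)
    """
    total = test_defects + field_defects
    if total == 0:
        # With no defects recorded at all, the ratio is undefined.
        raise ValueError("no defects recorded; DDP is undefined")
    return 100.0 * test_defects / total


# Hypothetical numbers: 90 defects found in system test, 10 escaped to the field.
print(defect_detection_percentage(90, 10))  # 90.0
```

A higher DDP means fewer defects escaped to the field relative to those the test level caught.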

Often, it aids the effectiveness and efficiency of reporting, tracking and managing defects when the defect-tracking tool provides an ability to vary some of the information captured depending on what the defect was reported against.
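One way to picture this is a report whose required fields depend on the artifact it was filed against. The Python sketch below is hypothetical: the artifact types follow the list at the start of this article (code, requirements, user guides, tests), but the field names are illustrative assumptions, not those of any particular tool.

```python
from dataclasses import dataclass, field

# Hypothetical extra fields captured per artifact type; names are illustrative only.
EXTRA_FIELDS = {
    "code": ["build_version", "steps_to_reproduce", "environment"],
    "requirements": ["requirement_id", "document_version"],
    "user_guide": ["section", "page"],
    "test": ["test_case_id", "expected_result"],
}


@dataclass
class DefectReport:
    reported_against: str   # which kind of artifact the defect concerns
    summary: str
    details: dict = field(default_factory=dict)

    def __post_init__(self):
        # Reject unknown artifact types, then check the type-specific fields.
        if self.reported_against not in EXTRA_FIELDS:
            raise ValueError(f"unknown artifact type: {self.reported_against}")
        missing = [f for f in EXTRA_FIELDS[self.reported_against]
                   if f not in self.details]
        if missing:
            raise ValueError(
                f"missing fields for {self.reported_against}: {missing}")


# A requirements defect needs no build or environment data.
req_defect = DefectReport(
    "requirements",
    "Ambiguous timeout rule",
    {"requirement_id": "REQ-12", "document_version": "1.3"},
)
```

Keeping the per-type fields in one table means the tool asks a requirements reviewer for a requirement ID rather than a build number, which is the kind of variation described above.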
